Introduction

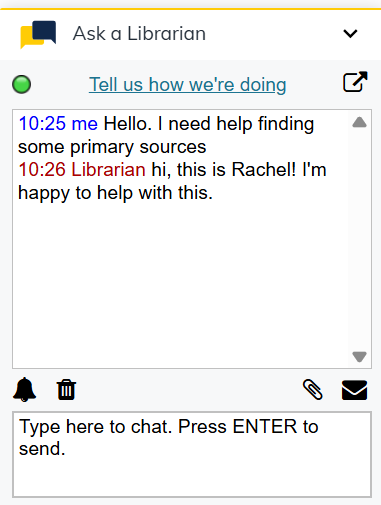

Ask a Librarian services are often the first point of contact between library users and library employees. This is especially true of Ask a Librarian’s chat service: the chat widget pops up on every page on the library website, making it an easy and accessible way for folks to get in touch with us, and many instruction librarians point out the widget as a resource when they work with students in a classroom setting. In FY2025, Ask a Librarian staff—20 full-time librarians and professional staff, and 18 graduate student employees—fielded 3,852 chat questions, over half of them requiring time-intensive reference or research support.

Although I’m now the coordinator for our chat service, I started out with the Ask a Librarian team as a graduate student employee in 2018, and I have really enjoyed watching the service evolve over the past seven years. As COVID-19 lockdowns reduced the presence of onsite reference desks, usage of our virtual services (chat and email) jumped dramatically, and it has outpaced our in-person services in the years since. Before it permanently closed in May 2025, Ask a Librarian’s onsite reference desk primarily answered logistical and directional questions (Where is the restroom? How do these printers work?), while the chat service took on the majority of research- and reference-focused questions. With that trend in mind, I embarked upon this assessment project with two guiding questions: (1) What do users need when they use our chat service? (2) How can we adjust our chat service and staff training efforts to meet those needs?

Process and Methodology

Those two guiding questions were the backbone of the survey design process for my colleague Allyssa Bruce and me. There were so many questions we wanted to ask and pieces of data we wanted to collect about our chat users, but we were mindful that a shorter survey would encourage a higher response rate, and that some questions just wouldn’t fit within the frame of our assessment goals. With the guidance of the library’s Assessment Specialist, Craig Smith, we finalized an eleven-question survey that collected both quantitative and qualitative data. These questions fell into two categories: demographic and informational.

The demographic questions asked things like:

- How are you affiliated with the University of Michigan?

- How did you learn about the Ask a Librarian chat service?

The informational questions asked things like:

- What was your primary goal or reason for contacting the Ask a Librarian chat service?

- Did you get the information or help you needed during your chat session?

- Did the service provider understand what you needed?

The eleventh survey question asked respondents whether they would like to be entered into a random drawing for one of fifty $20 gift cards.

Next, to add the survey link to our chat widget, we worked with Bridget Burke, Front-End Developer & Accessibility Specialist. This was a critical part of the process for us. We wanted to hear from users who’d had an actual experience using our chat service—in other words, not just users passing through on the library website. We also wanted each survey response to be internally linked to that user’s chat transcript, so we could take a closer look at how the conversation they had might have informed their answers in the survey. Bridget was able to wrangle our widget’s JavaScript code to meet both of those goals. To see the survey link, users needed to begin a chat conversation by sending a message in the widget. If a user then clicked on the survey link, their unique GuestID (automatically and randomly assigned by our chat software) would attach to their survey response, allowing us to search the software’s back end to locate and review the corresponding transcript. This meant that survey responses would not be entirely anonymous; users were warned about the connection between their responses and their transcript before beginning the survey.
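We haven’t shared Bridget’s actual widget code here, so the following is only a minimal sketch of the two behaviors described above, written in TypeScript (a typed superset of the JavaScript the widget uses). It assumes a hypothetical survey address (`SURVEY_BASE_URL`), a hypothetical query parameter name (`guestId`), and a hypothetical `ChatSurveyGate` class that the widget would notify each time the user sends a message. None of these names come from our chat software or survey platform.

```typescript
// Hypothetical values: the real survey platform and parameter name are not specified.
const SURVEY_BASE_URL = "https://survey.example.edu/chat-feedback";
const GUEST_ID_PARAM = "guestId";

// Append the chat software's GuestID to the survey link as a query parameter.
// URLSearchParams takes care of percent-encoding unusual characters.
function buildSurveyUrl(baseUrl: string, guestId: string): string {
  const url = new URL(baseUrl);
  url.searchParams.set(GUEST_ID_PARAM, guestId);
  return url.toString();
}

// Tracks one chat session and keeps the survey link hidden until the
// user has sent at least one message in the widget.
class ChatSurveyGate {
  private readonly guestId: string;
  private messagesSent = 0;

  constructor(guestId: string) {
    this.guestId = guestId;
  }

  // Hypothetical hook: call this from the widget's "message sent" event.
  recordMessageSent(): void {
    this.messagesSent += 1;
  }

  // Returns the GuestID-tagged survey URL, or null if no message has been sent yet.
  surveyLink(): string | null {
    if (this.messagesSent === 0) {
      return null;
    }
    return buildSurveyUrl(SURVEY_BASE_URL, this.guestId);
  }
}

// Example session: no link before the first message, a GuestID-tagged link after it.
const session = new ChatSurveyGate("guest-42");
console.log(session.surveyLink()); // null
session.recordMessageSent();
console.log(session.surveyLink()); // https://survey.example.edu/chat-feedback?guestId=guest-42
```

In a real widget, `recordMessageSent` would be wired to whatever message event the chat vendor exposes, and `surveyLink()` would decide whether to render the link at all. Carrying the GuestID in the URL is exactly what makes responses traceable to transcripts, which is why the warning shown before the survey matters.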

The survey went live on our chat widget in September 2024.

Findings

Between late September and mid-December 2024, the Ask a Librarian chat service fielded 1,191 questions from library users, and 84 users completed the survey. This was a 7% response rate. We were hoping for a response rate closer to 10% for a more representative snapshot of our target audience, but we were still able to draw key conclusions from this smaller set of data.
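For reference, the response rate and the distance to our 10% goal work out as follows. The second figure is simple arithmetic on the same period’s totals, not an additional survey result:

$$
\frac{84 \text{ responses}}{1{,}191 \text{ questions}} \approx 0.071 = 7.1\%, \qquad 0.10 \times 1{,}191 \approx 119 \text{ responses}
$$

In other words, reaching 10% would have taken roughly 35 more completed surveys over the same period.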

Here is a summary of our findings:

- Students made up the largest group of survey respondents. Undergraduate students accounted for 25% of respondents and graduate students for 20.5% (45.5% combined), with staff in between at 23.9%. These numbers are fairly consistent with overall chat service user demographics.

- Research help was the most common reason respondents contacted us. “Research help” was the most-selected category for this question (39.1%), followed by “Borrowing and lending services” (28.7%) and “Technology help” (11.5%).

- Not everyone had used Ask a Librarian before. 45% of survey respondents were repeat patrons, while 43% were new to the service. (The remaining respondents couldn’t remember whether they had chatted with us before or not.)

- Most folks learn about us from the pop-up chat widget on the library website. This accounted for 65% of survey respondents! In contrast, word-of-mouth referrals—like library instruction sessions and professors or instructors—only accounted for a combined 13% of survey respondents.

- The majority of respondents were happy with our chat service! 83% reported that they received the information they needed, and 83% also reported that they were either “satisfied” or “very satisfied” with Ask a Librarian staff.

However, there are also some factors that may have influenced this data:

- “Other” as a catch-all category. Three of our multiple-choice questions offered “Other” as a possible answer. Although this option was necessary to capture answers not represented by specific categories, it may also have acted as a catch-all. Because “Other” is such a broad term, it could refer to any number of things, including needs that another category would have captured more accurately. For example, a user who reported seeking help with “Other” might have needed assistance with a broken link, which really falls under “Technology help.” These are not necessarily intuitive distinctions from a user perspective, and it’s likely that they skewed our results.

- Answer order. After we finished gathering 2024 responses for the assessment project, we noticed an oversight in our survey: for the question about user satisfaction, we had unintentionally reversed the order of the possible answers. Most of our multiple-choice lists began with the most positive option (“Yes” or “Very satisfied”) and moved down to the most negative option (“No” or “Very dissatisfied”); for this particular question, however, the order was swapped. Respondents who had gotten used to seeing the most positive option first may have selected “Very dissatisfied” by mistake, again skewing our results.

Conclusions and Next Steps

To build on the initial, data-driven takeaways shared above, we are now moving into the qualitative coding phase of the project and shifting our focus to the chat transcripts. We have already developed a codebook and anonymized the transcripts to remove identifying information about both users and Ask a Librarian staff. Once coded for themes, these transcripts will provide further context for the survey responses. The survey link is still active in the Ask a Librarian chat widget, and we are still receiving responses. Beyond formal assessment purposes, this allows us to address user problems as they arise or (more happily!) to send positive feedback along to Ask a Librarian service providers as encouragement. One of my favorite parts of this project has been seeing the Ask a Librarian team at work and getting a firsthand look at the knowledge and empathy they share with our chat users on a regular basis.

In that vein, it’s been encouraging to learn that the majority of users are happy with our chat service. Most of the rare negative responses we received came from U-M alumni or unaffiliated users who were unhappy with the library’s access policies for online resources and databases. Even before and beyond this formal assessment, Ask a Librarian team members have often received that sort of anecdotal feedback from non-affiliates, so the trends among that group of respondents were largely expected. We have also noticed specific policies, such as those relating to interlibrary loan or borrowing privileges, that we need to clarify with our team, especially our student employees. Satisfyingly, this connects right back to one of our initial guiding questions (how can we improve staff training efforts to meet user needs?), and it feels especially relevant as the Ask a Librarian service coordinators begin an overhaul of our student employee training program.